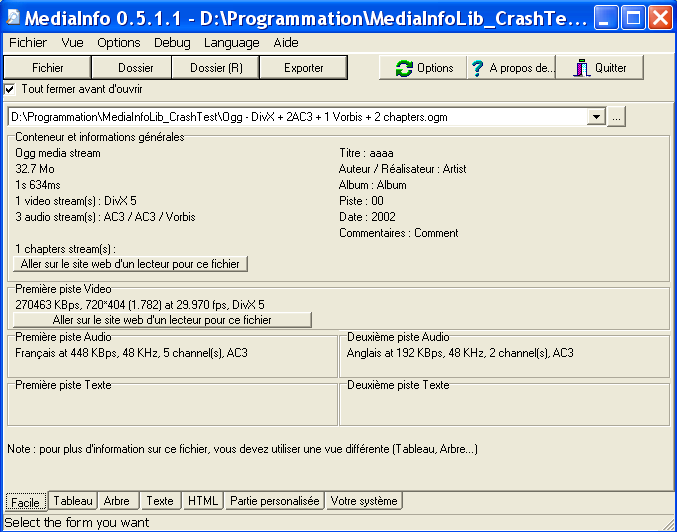

You should use the mediainfo tool (from the Debian package of the same name) to inspect the video file and discover the audio format, then use the proper file extension. The extension of the destination file does matter to ffmpeg: if you use the wrong one you may get the error message Unable to find a suitable output format.
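As a rough sketch of that workflow in Python, the snippet below asks mediainfo for the audio format and picks a matching extension. The mapping table and both function names are illustrative assumptions, not part of the original recipe, and the probe assumes the file has a single audio track.

```python
import subprocess

# Hypothetical mapping (an assumption for illustration) from the audio format
# name reported by mediainfo to an extension usable with "ffmpeg -c:a copy".
EXTENSION_BY_FORMAT = {
    "AAC": "m4a",
    "MPEG Audio": "mp3",
    "AC-3": "ac3",
    "Vorbis": "ogg",
    "Opus": "opus",
    "FLAC": "flac",
}


def extension_for_format(audio_format):
    """Return the file extension to use for a given mediainfo format name."""
    key = audio_format.strip()
    if key not in EXTENSION_BY_FORMAT:
        raise ValueError("No known extension for audio format: %r" % key)
    return EXTENSION_BY_FORMAT[key]


def probe_audio_format(path):
    """Ask mediainfo for the audio format of a file (single audio track assumed)."""
    result = subprocess.run(
        ["mediainfo", "--Inform=Audio;%Format%", path],
        capture_output=True, text=True, check=True,
    )
    return result.stdout.strip()
```

For example, `extension_for_format(probe_audio_format("video.mp4"))` would give the extension to use for the destination file of the audio copy.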

#Using mediainfo only on videos mp4#

With these two commands we extract the AAC audio from an MP4 file and then convert it into a 16-bit WAV file at 48 kHz:

    ffmpeg -i video.mp4 -vn -c:a copy audio.m4a
    ffmpeg -i audio.m4a -vn -c:a pcm_s16le -ar 48000 avaudio.wav

The re-encoding script for each clip:

    #!/bin/sh
    # Re-encode video clips in MPEG transport stream (MPEG-TS) format applying
    # some saturation and gamma correction.
    INPUT="$1"
    OUTPUT="$INPUT.eq.ts"
    EQ_FILTER="eq=saturation=0.88:gamma_r=0.917:gamma_g=1.007:gamma_b=1.297"
    # Produces MPEG segments like the ones produced by the SJCAM SJ8Pro:
    ffmpeg -i "$INPUT" \
        ...
        -preset veryslow -profile:v main -level:v 4.2 -pix_fmt yuvj420p \
        ...

The gamma correction for the three RGB channels was determined with the GIMP, using the Colors ⇒ Levels ⇒ Pick the gray point for all channels tool. The use of MPEG-TS clips allowed the montage of the final video by just concatenating them.
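The filter string passed to ffmpeg is easy to get wrong by hand, so here is a small Python sketch that builds it from the four correction values. The function name is hypothetical; the range checks follow the limits documented for ffmpeg's eq filter (saturation 0 to 3, gamma 0.1 to 10).

```python
def build_eq_filter(saturation, gamma_r, gamma_g, gamma_b):
    """Build an ffmpeg "eq" filter string for saturation and per-channel gamma."""
    if not 0.0 <= saturation <= 3.0:
        raise ValueError("saturation must be between 0.0 and 3.0")
    for name, value in (("gamma_r", gamma_r), ("gamma_g", gamma_g), ("gamma_b", gamma_b)):
        if not 0.1 <= value <= 10.0:
            raise ValueError("%s must be between 0.1 and 10.0" % name)
    return "eq=saturation={}:gamma_r={}:gamma_g={}:gamma_b={}".format(
        saturation, gamma_r, gamma_g, gamma_b
    )


# The values used in the recipe for the SJCAM clips.
EQ_FILTER = build_eq_filter(0.88, 0.917, 1.007, 1.297)
```

The resulting string is what one would normally hand to ffmpeg through its `-vf` option.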

It is possible to re-encode all the video clips applying an equalization filter while keeping all the encoding parameters as similar as possible to the original ones. The video clips were extracted from the original MP4 container as MPEG-TS snippets containing only video (no audio). Each clip was then re-encoded with the ffmpeg recipe shown above.
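The exact commands used to cut the video-only MPEG-TS snippets and to join them are not shown here, so the following Python sketch is only one plausible way to build them. The function names are hypothetical; the options used (`-an`, `-c:v copy`, `-f mpegts` and the `concat:` protocol) are standard ffmpeg features.

```python
def extract_video_ts_cmd(src, dst, start=None, duration=None):
    """Command to copy the video stream (no audio) of src into an MPEG-TS file."""
    cmd = ["ffmpeg"]
    if start is not None:
        cmd += ["-ss", str(start)]      # seek before opening the input (fast)
    cmd += ["-i", src]
    if duration is not None:
        cmd += ["-t", str(duration)]    # limit the length of the snippet
    cmd += ["-an", "-c:v", "copy", "-f", "mpegts", dst]
    return cmd


def concat_ts_cmd(clips, dst):
    """Command to join MPEG-TS clips without re-encoding, via the concat protocol."""
    if not clips:
        raise ValueError("at least one clip is required")
    return ["ffmpeg", "-i", "concat:" + "|".join(clips), "-c", "copy", dst]
```

Either list can be passed to `subprocess.run(cmd, check=True)`. Note that with stream copy the cut points fall on keyframes, so the snippets may not start exactly at the requested time.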

#Using mediainfo only on videos pro#

Re-encoding with tonal correction

We had some video clips recorded with an SJCAM SJ8 Pro camera with bad color balance and saturation, due to some bad tables loaded into the firmware.

We can use Avidemux to make the final rendering (re-encoding). For a command-line-only solution you can consider ffmpeg to perform the re-encoding and to merge (mux) everything into a Matroska container:

    #!/bin/sh
    TITLE="Balcani, maggio 2022"
    ffmpeg \
        ...
        -i 'audio-music.ogg' -i 'audio-live.ogg' \
        -metadata title="$TITLE" -metadata:s:v:0 title="$TITLE" \
        -metadata:s:a:0 title="Accompagnamento musicale" \
        -metadata:s:a:1 title="Audio in presa diretta" \
        -vcodec 'libx264' -pix_fmt 'yuvj420p' -preset 'veryslow' -tune 'film' -profile:v 'high' -level:v 5 \
        ...
        "2022-05_balcani.mkv"
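The per-stream titles follow a regular pattern, so they can be generated rather than typed. This is a minimal Python sketch with a hypothetical helper name; it only builds the `-metadata` arguments and leaves the rest of the ffmpeg command line to the caller.

```python
def metadata_args(title, audio_titles):
    """Build ffmpeg -metadata arguments for the container, video and audio streams."""
    args = ["-metadata", "title=" + title,
            "-metadata:s:v:0", "title=" + title]
    # One title per audio stream, numbered in the order the streams are muxed.
    for index, audio_title in enumerate(audio_titles):
        args += ["-metadata:s:a:%d" % index, "title=" + audio_title]
    return args


ARGS = metadata_args(
    "Balcani, maggio 2022",
    ["Accompagnamento musicale", "Audio in presa diretta"],
)
```

Because the arguments are kept as list items, titles containing spaces need no shell quoting when the list is passed to `subprocess.run`.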

Avidemux encoding settings:

Average Bitrate (Two Pass), Average Bitrate 4096 kb/s (about 1.8 GB per hour)
Preset: slow (or less), Tuning: film, Profile: High, IDC Level: Auto

    #!/bin/sh -e
    INPUT_FILE="$1"    # An MP4 file.
    STILLFRAME="$2"    # Frame to extract MM:SS.DDD.
    STILL_IMG="_stillimage.$$.jpg"
    # NOTICE: Put -ss option after -i if cut is not frame precise (slower)!
    ffmpeg -loglevel fatal -ss "$STILLFRAME" -i "$INPUT_FILE" -vframes 1 -f image2 "$STILL_IMG"
    ffmpeg -loglevel error -y -f lavfi -i 'anullsrc=sample_rate=48000' \
        ...

NOTICE: The resulting video clips have a start_pts greater than zero (e.g. 127920), which is different from the videos produced by the XiaoYi camera. It is common practice to produce videos with the PTS (Presentation Time Stamp) of the first frame greater than zero; otherwise a subsequent B-frame may eventually require a negative Decode Time Stamp (DTS), which is bad. This non-zero PTS should not pose any problem in concatenating video clips.

This is the kenburst-1-make-images Python script. It sends a batch of images to the stdout, producing the Ken Burns effect on an image for the specified duration. The Ken Burns effect is specified by two geometries which identify the start and the end position within the image itself.

    #!/usr/bin/python
    # Make a batch of images to create a Ken Burns effect on a source image.
    import os.path, re, subprocess, sys
    if len(sys. ...
        ... exit(1)
    # Geometry: "WxH+X+Y" => Width, Height, X (from left), Y (from top)
    stdout_mode = True
    (geom1, geom2) = geometry. ...
    ... split('x|\+', geom2)
    frames = (int(round(duration_s * float(fps))) - 1)
    for f in range(0, (frames + 1)):
        ...
        w = int(float(w1) + (float(w2) - float(w1)) * percent)
        h = int(float(h1) + (float(h2) - float(h1)) * percent)
        x = int(float(x1) + (float(x2) - float(x1)) * percent)
        y = int(float(y1) + (float(y2) - float(y1)) * percent)
        crop = '%dx%d+%d+%d!' % (w, h, x, y)
        if stdout_mode:
            cmd = ...
        else:
            cmd = ...
        print ' '. ...
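The core of the Ken Burns script is a linear interpolation between the two geometries. The Python 3 sketch below reproduces just that part. The function names are hypothetical, and computing `percent` as `f / frames` is an assumption, since that line is not visible in the script above; how each crop string is then applied to the image is also not shown there.

```python
import re


def parse_geometry(geom):
    """Parse "WxH+X+Y" into (width, height, x, y); negative offsets are not handled."""
    w, h, x, y = (int(value) for value in re.split(r"x|\+", geom))
    return w, h, x, y


def crop_geometries(geom1, geom2, duration_s, fps):
    """Return one crop geometry per frame, moving linearly from geom1 to geom2."""
    start = parse_geometry(geom1)
    end = parse_geometry(geom2)
    frames = int(round(duration_s * float(fps))) - 1
    crops = []
    for f in range(frames + 1):
        # Assumption: progress grows linearly from 0.0 (first frame) to 1.0 (last).
        percent = f / frames if frames > 0 else 0.0
        w, h, x, y = (int(a + (b - a) * percent) for a, b in zip(start, end))
        crops.append("%dx%d+%d+%d!" % (w, h, x, y))
    return crops
```

For instance, `crop_geometries("1000x500+0+0", "500x250+100+50", 1, 5)` yields five crop strings, starting at the first geometry and ending exactly at the second.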
